How to install and set up Incus on a cloud server – Mi blog lah!

Incus is a manager for virtual machines and system containers.

A virtual machine is an instance of an operating system that runs on a computer, along with the main operating system. A virtual machine uses hardware virtualization features for the separation from the main operating system.

A system container is an instance of an operating system that also runs on a computer, along with the main operating system. A system container uses, instead, security primitives of the Linux kernel for the separation from the main operating system. You can think of system containers as software virtual machines.

In this post we are going to see how to install and set up Incus on a cloud server. While you can install all sorts of different services together on a cloud server, it gets messy, and a single failure can bring down the whole server. We are doing this in style: separate services will stay in different instances, all managed by Incus.

Prerequisites

This post assumes that

- You are familiar with common Linux administration.

- You have a basic knowledge of using Incus.

Selecting a cloud server

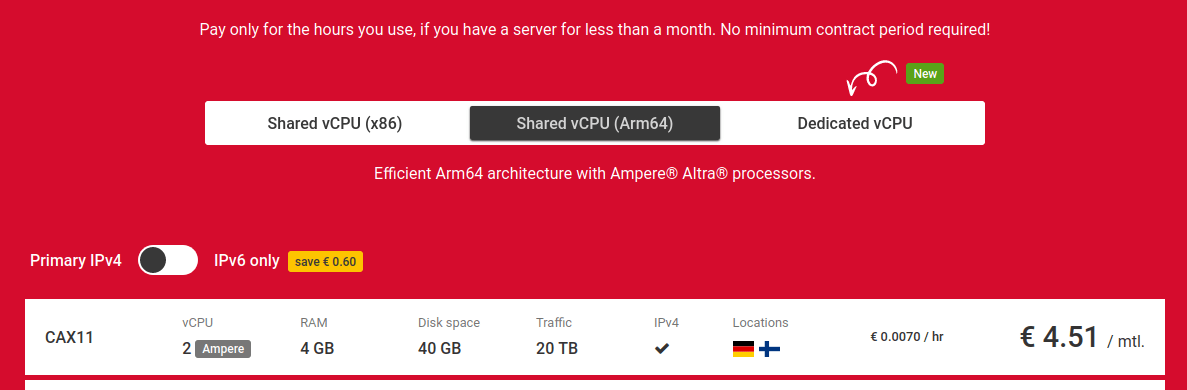

There are companies that provide cloud servers. For this post we are going to use cloud servers from Hetzner, which offers truly thrifty cloud hosting. No, it’s not me that says truly thrifty cloud hosting. They use that phrase in the <title></title> tag of their cloud server page.

We are going to go thrifty and select the Ampere (ARM) cloud server with 2 virtual CPUs, 4GB RAM and 40GB storage. It’s the first time I am using this line of servers and I am writing the post as I go along with launching the cloud server.

While selecting the features of the CAX11 cloud server, I got the breakdown of the price. The CAX11 cloud server is 4.08€/month, and the IPv4 address is 0.62€/month. If you do not require an IPv4 address and you are happy with the free IPv6 address, you can save the 0.62€/month. For this post I have added IPv4. Total cost 4.70€/month.

I have not created the server yet. There is the option to add separate cloud storage (a volume) to the cloud server. I am adding one, and I am going to put the Incus storage pool on it. The cloud server already has 40GB of disk space, which is more than enough for the base operating system. In an old post I showed how to repartition the 40GB disk so that Incus can use part of it instead. For this post, we are getting a 30GB volume.

For the operating system, we can currently choose between Debian and Ubuntu, because Zabbly provides ready-made Incus packages for both. I selected Ubuntu 22.04.

Complete the rest of the configuration as you wish and click to Create the cloud server.

Setting up the cloud server

I am installing Incus from the Zabbly package repository. I am repeating the commands for reference.

- Verify there is a /etc/apt/keyrings/ directory, download the Zabbly repository key and install it.

sudo mkdir -p /etc/apt/keyrings/

sudo curl -fsSL https://pkgs.zabbly.com/key.asc -o /etc/apt/keyrings/zabbly.asc

- Create a file, let’s name it repo.sh, with the following contents.

echo "Enabled: yes"

echo "Types: deb"

echo "URIs: https://pkgs.zabbly.com/incus/stable"

echo "Suites: $(. /etc/os-release && echo ${VERSION_CODENAME})"

echo "Components: main"

echo "Architectures: $(dpkg --print-architecture)"

echo "Signed-By: /etc/apt/keyrings/zabbly.asc"

- Run the following to create the repository configuration file.

sh repo.sh | sudo tee /etc/apt/sources.list.d/zabbly-incus-stable.sources

- Update the package list and install the incus package (includes the server and the CLI client).

sudo apt update

sudo apt install -y incus

- Install the zfsutils-linux package for ZFS support. If you prefer to use btrfs for the storage pool, you do not need to install this package.

sudo apt install -y zfsutils-linux
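For reference, here is a small sketch that previews the repository file the steps above should produce on the server used in this post. The function name zabbly_sources is made up for illustration, and it takes the release codename and the architecture as arguments instead of detecting them, so it can be run on any machine. The values jammy and arm64 are what I would expect VERSION_CODENAME and dpkg --print-architecture to report on Ubuntu 22.04 on an ARM64 server.

```shell
#!/bin/sh
# Hypothetical helper: print the deb822 stanza that repo.sh generates,
# with the suite (release codename) and architecture passed in explicitly.
zabbly_sources() {
    suite="$1"
    arch="$2"
    printf '%s\n' \
        "Enabled: yes" \
        "Types: deb" \
        "URIs: https://pkgs.zabbly.com/incus/stable" \
        "Suites: ${suite}" \
        "Components: main" \
        "Architectures: ${arch}" \
        "Signed-By: /etc/apt/keyrings/zabbly.asc"
}

# Preview for Ubuntu 22.04 (jammy) on an ARM64 server.
zabbly_sources jammy arm64
```

If the file under /etc/apt/sources.list.d/ shows a different suite or architecture, the package list update will most likely fail or pick up the wrong packages.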

Initializing Incus

We take extra care to get the allocated 30GB block device into a state that Incus can use to create the storage pool.

Preparing the block device

Hetzner has formatted and mounted the block device. We need to unmount it first and then wipe any remaining signatures. Incus wants the block device to be unmounted and without a filesystem.

First unmount the partition.

# mount

...

/dev/sda on /mnt/HC_Volume_100288921 type ext4 (rw,relatime,discard)

# umount /dev/sda

#

Then, remove the signature.

# fdisk /dev/sda

Welcome to fdisk (util-linux 2.37.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

The device contains 'ext4' signature and it will be removed by a write command. See fdisk(8) man page and --wipe option for more details.

Device does not contain a recognized partition table.

Created a new DOS disklabel with disk identifier 0xe193e35e.

Command (m for help): w

The partition table has been altered.

Syncing disks.
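The same cleanup can probably be done without the interactive fdisk session. The following is a minimal sketch, assuming the wipefs tool from util-linux is installed and that the volume is /dev/sda as above. The helper names is_mounted and wipe_signatures are made up for this post. Wiping is destructive, so the sketch refuses to touch a device that still appears in /proc/mounts.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given device is listed as mounted.
is_mounted() {
    grep -qs "^$1 " /proc/mounts
}

# Hypothetical helper: erase all filesystem and partition-table signatures.
# Assumes wipefs (util-linux) is installed; must be run as root.
wipe_signatures() {
    dev="$1"
    if is_mounted "$dev"; then
        echo "refusing to wipe $dev: it is still mounted" >&2
        return 1
    fi
    wipefs --all "$dev"
}

# Usage on the server from this post (destructive, so left commented out):
# umount /dev/sda
# wipe_signatures /dev/sda
```

Note that the mount check only matches the exact device name, so a mounted partition such as /dev/sda1 would not be detected when /dev/sda is passed.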

Running incus admin init

We are ready to initialize Incus by running incus admin init.

# incus admin init

Would you like to use clustering? (yes/no) [default=no]:

Do you want to configure a new storage pool? (yes/no) [default=yes]:

Name of the new storage pool [default=default]:

Name of the storage backend to use (btrfs, dir, lvm, zfs) [default=zfs]:

Create a new ZFS pool? (yes/no) [default=yes]:

Would you like to use an existing empty block device (e.g. a disk or partition)? (yes/no) [default=no]: yes

Path to the existing block device: /dev/sda

Would you like to create a new local network bridge? (yes/no) [default=yes]:

What should the new bridge be called? [default=incusbr0]:

What IPv4 address should be used? (CIDR subnet notation, “auto” or “none”) [default=auto]:

What IPv6 address should be used? (CIDR subnet notation, “auto” or “none”) [default=auto]:

Would you like the server to be available over the network? (yes/no) [default=no]:

Would you like stale cached images to be updated automatically? (yes/no) [default=yes]:

Would you like a YAML "init" preseed to be printed? (yes/no) [default=no]: yes

config: {}

networks:

- config:

    ipv4.address: auto

    ipv6.address: auto

  description: ""

  name: incusbr0

  type: ""

  project: default

storage_pools:

- config:

    source: /dev/sda

  description: ""

  name: default

  driver: zfs

profiles:

- config: {}

  description: ""

  devices:

    eth0:

      name: eth0

      network: incusbr0

      type: nic

    root:

      path: /

      pool: default

      type: disk

  name: default

projects: []

cluster: null
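Printing the preseed pays off when you rebuild the server: saved to a file, it can be fed back to incus admin init so that no questions are asked. Here is a minimal sketch, assuming the YAML above was saved as preseed.yaml (a file name chosen here) and that the block device is empty again. The helper name apply_preseed is made up.

```shell
#!/bin/sh
# Hypothetical helper: initialize Incus non-interactively from a preseed file.
apply_preseed() {
    file="$1"
    if [ ! -r "$file" ]; then
        echo "cannot read preseed file: $file" >&2
        return 1
    fi
    # incus admin init reads the preseed YAML from standard input.
    incus admin init --preseed < "$file"
}

# Usage (run as root on a fresh server):
# apply_preseed preseed.yaml
```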

Incus has been initialized and is ready to go. By default Hetzner does not create a non-root account, therefore we are running all the commands as root. On a production system we would prefer to create a non-root account and add it to the incus-admin Unix group. The non-root account would then be able to run all the incus commands without requiring root.
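For such a production setup, the group change could look like the following sketch. The incus-admin group is the one mentioned above; the helper name grant_incus_admin and the account name myuser are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: add an existing account to the incus-admin group.
grant_incus_admin() {
    user="$1"
    if ! id -u "$user" >/dev/null 2>&1; then
        echo "no such user: $user" >&2
        return 1
    fi
    # -a appends to the supplementary groups instead of replacing them.
    usermod -aG incus-admin "$user"
}

# Usage (as root); "myuser" is a placeholder for an account you created:
# grant_incus_admin myuser
```

The account has to log out and back in before the new group membership takes effect.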

An overview of the Incus commands

The Zabbly packages of Incus place the commands into /opt/incus/bin/. Here is the full list.

root@ubuntu-incus-dev-4gb-fsn1-1:~# ls -l /opt/incus/bin/

total 230492

-rwxr-xr-x 1 root root 1618984 Dec 23 21:56 criu

-rwxr-xr-x 1 root root 13750808 Dec 23 21:56 incus

-rwxr-xr-x 1 root root 11849656 Dec 23 21:56 incus-agent

-rwxr-xr-x 1 root root 9683136 Dec 23 21:56 incus-benchmark

-rwxr-xr-x 1 root root 9682592 Dec 23 21:56 incus-user

-rwxr-xr-x 1 root root 43200216 Dec 23 21:56 incusd

-rwxr-xr-x 1 root root 11049696 Dec 23 21:56 lxc-to-incus

-rwxr-xr-x 1 root root 67928 Dec 23 21:56 lxcfs

-rwxr-xr-x 1 root root 12797024 Dec 23 21:56 lxd-to-incus

-rwxr-xr-x 1 root root 94979520 Dec 23 21:56 minio

-rwxr-xr-x 1 root root 47360 Dec 23 21:56 nvidia-container-cli

-rwxr-xr-x 1 root root 1994336 Dec 23 21:56 qemu-img

-rwxr-xr-x 1 root root 22753376 Dec 23 21:56 qemu-system-aarch64

-rwxr-xr-x 1 root root 36256 Dec 23 21:56 swtpm

-rwxr-xr-x 1 root root 584360 Dec 23 21:56 virtfs-proxy-helper

-rwxr-xr-x 1 root root 1898776 Dec 23 21:56 virtiofsd

root@ubuntu-incus-dev-4gb-fsn1-1:~#

We will try incus-benchmark, the benchmarking tool. Here is its default help page.

root@ubuntu-incus-dev-4gb-fsn1-1:~# /opt/incus/bin/incus-benchmark

Description:

Benchmark performance of Incus

This tool lets you benchmark various actions on a local Incus daemon.

It can be used just to check how fast a given host is, to

compare performance on different servers or for performance tracking

when doing changes to the codebase.

A CSV report can be produced to be consumed by graphing software.

Usage:

incus-benchmark [command]

Examples:

# Spawn 20 containers in batches of 4

incus-benchmark launch --count 20 --parallel 4

# Create 50 Alpine containers in batches of 10

incus-benchmark init --count 50 --parallel 10 images:alpine/edge

# Delete all test containers using dynamic batch size

incus-benchmark delete

Available Commands:

delete Delete containers

help Help about any command

init Create containers

launch Create and start containers

start Start containers

stop Stop containers

Flags:

-h, --help Print help

-P, --parallel Number of threads to use (default -1)

--project string Project to use (default "default")

--report-file Path to the CSV report file

--report-label Label for the new entry in the report [default=ACTION]

--version Print version number

Use "incus-benchmark [command] --help" for more information about a command.

root@ubuntu-incus-dev-4gb-fsn1-1:~#

We can init, launch, start, stop or delete instances. In the following we create 50 instances of the images:alpine/edge container image, 10 instances at a time. The instances are not started. This gives an idea of disk throughput.

root@ubuntu-incus-dev-4gb-fsn1-1:~# /opt/incus/bin/incus-benchmark init --count 50 --parallel 10 images:alpine/edge

Test environment:

Server backend: incus

Server version: 0.4

Kernel: Linux

Kernel architecture: aarch64

Kernel version: 5.15.0-91-generic

Storage backend: zfs

Storage version: 2.1.5-1ubuntu6~22.04.2

Container backend: lxc

Container version: 5.0.3

Test variables:

Container count: 50

Container mode: unprivileged

Startup mode: normal startup

Image: images:alpine/edge

Batches: 5

Batch size: 10

Remainder: 0

[Jan 18 00:58:26.373] Importing image into local store: de834b5936ca94aacad4df1ff55b5e6805b06220b9610a6394a518826929a160

[Jan 18 00:58:28.544] Found image in local store: de834b5936ca94aacad4df1ff55b5e6805b06220b9610a6394a518826929a160

[Jan 18 00:58:28.544] Batch processing start

[Jan 18 00:58:41.400] Processed 10 containers in 12.856s (0.778/s)

[Jan 18 00:58:55.870] Processed 20 containers in 27.325s (0.732/s)

[Jan 18 00:59:14.683] Processed 40 containers in 46.139s (0.867/s)

[Jan 18 00:59:30.305] Batch processing completed in 61.760s

root@ubuntu-incus-dev-4gb-fsn1-1:~#

How long does it take to start those 50 containers? About 27 seconds, which is close to 2 containers per second.

root@ubuntu-incus-dev-4gb-fsn1-1:~# /opt/incus/bin/incus-benchmark start

Test environment:

Server backend: incus

Server version: 0.4

Kernel: Linux

Kernel architecture: aarch64

Kernel version: 5.15.0-91-generic

Storage backend: zfs

Storage version: 2.1.5-1ubuntu6~22.04.2

Container backend: lxc

Container version: 5.0.3

[Jan 18 01:04:51.346] Starting 50 containers

[Jan 18 01:04:51.347] Batch processing start

[Jan 18 01:04:52.043] Processed 2 containers in 0.696s (2.872/s)

[Jan 18 01:04:52.988] Processed 4 containers in 1.642s (2.436/s)

[Jan 18 01:04:54.994] Processed 8 containers in 3.647s (2.194/s)

[Jan 18 01:04:59.480] Processed 16 containers in 8.133s (1.967/s)

[Jan 18 01:05:08.578] Processed 32 containers in 17.232s (1.857/s)

[Jan 18 01:05:18.218] Batch processing completed in 26.872s

root@ubuntu-incus-dev-4gb-fsn1-1:~#

How about stopping them? A tiny bit longer than starting them.

root@ubuntu-incus-dev-4gb-fsn1-1:~# /opt/incus/bin/incus-benchmark stop

Test environment:

Server backend: incus

Server version: 0.4

Kernel: Linux

Kernel architecture: aarch64

Kernel version: 5.15.0-91-generic

Storage backend: zfs

Storage version: 2.1.5-1ubuntu6~22.04.2

Container backend: lxc

Container version: 5.0.3

[Jan 18 01:08:22.096] Stopping 50 containers

[Jan 18 01:08:22.096] Batch processing start

[Jan 18 01:08:23.295] Processed 2 containers in 1.199s (1.669/s)

[Jan 18 01:08:24.487] Processed 4 containers in 2.391s (1.673/s)

[Jan 18 01:08:26.917] Processed 8 containers in 4.821s (1.659/s)

[Jan 18 01:08:31.890] Processed 16 containers in 9.794s (1.634/s)

[Jan 18 01:08:41.803] Processed 32 containers in 19.707s (1.624/s)

[Jan 18 01:08:53.534] Batch processing completed in 31.438s

root@ubuntu-incus-dev-4gb-fsn1-1:~#

And deleting the stopped containers? About five containers per second.

root@ubuntu-incus-dev-4gb-fsn1-1:~# /opt/incus/bin/incus-benchmark delete

Test environment:

Server backend: incus

Server version: 0.4

Kernel: Linux

Kernel architecture: aarch64

Kernel version: 5.15.0-91-generic

Storage backend: zfs

Storage version: 2.1.5-1ubuntu6~22.04.2

Container backend: lxc

Container version: 5.0.3

[Jan 18 01:10:44.351] Deleting 50 containers

[Jan 18 01:10:44.351] Batch processing start

[Jan 18 01:10:44.960] Processed 2 containers in 0.609s (3.286/s)

[Jan 18 01:10:45.294] Processed 4 containers in 0.943s (4.242/s)

[Jan 18 01:10:46.086] Processed 8 containers in 1.735s (4.611/s)

[Jan 18 01:10:47.505] Processed 16 containers in 3.153s (5.074/s)

[Jan 18 01:10:50.483] Processed 32 containers in 6.131s (5.219/s)

[Jan 18 01:10:53.400] Batch processing completed in 9.049s

root@ubuntu-incus-dev-4gb-fsn1-1:~#

Let’s try again, this time launching 50 containers, 10 at a time. It takes about 3 seconds per container on a 2-vCPU ARM64 server with 4GB RAM. With all of them running, the free command reports 280MB of RAM still available.

root@ubuntu-incus-dev-4gb-fsn1-1:~# /opt/incus/bin/incus-benchmark launch --count 50 --parallel 10

Test environment:

Server backend: incus

Server version: 0.4

Kernel: Linux

Kernel architecture: aarch64

Kernel version: 5.15.0-91-generic

Storage backend: zfs

Storage version: 2.1.5-1ubuntu6~22.04.2

Container backend: lxc

Container version: 5.0.3

Test variables:

Container count: 50

Container mode: unprivileged

Startup mode: normal startup

Image: images:ubuntu/22.04

Batches: 5

Batch size: 10

Remainder: 0

[Jan 18 01:12:51.522] Importing image into local store: fe0be2b7f4d2b97330883f7516a3962524d2a37a9690397384d2bbf46d0da909

[Jan 18 01:12:52.478] Found image in local store: fe0be2b7f4d2b97330883f7516a3962524d2a37a9690397384d2bbf46d0da909

[Jan 18 01:12:52.478] Batch processing start

[Jan 18 01:13:29.866] Processed 10 containers in 37.387s (0.267/s)

[Jan 18 01:13:56.357] Processed 20 containers in 63.879s (0.313/s)

[Jan 18 01:14:53.315] Processed 40 containers in 120.836s (0.331/s)

[Jan 18 01:15:26.863] Batch processing completed in 154.385s

root@ubuntu-incus-dev-4gb-fsn1-1:~#
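The average rates quoted in this section follow from the totals printed by incus-benchmark: the number of containers divided by the "Batch processing completed" time. Here is a small sketch of that arithmetic using awk. The helper name rate is made up here, and the numbers are copied from the runs above.

```shell
#!/bin/sh
# Hypothetical helper: average containers per second, to two decimals.
rate() {
    awk -v n="$1" -v t="$2" 'BEGIN { printf "%.2f\n", n / t }'
}

# Totals taken from the benchmark runs above (50 containers each).
echo "init:   $(rate 50 61.760) containers/s"
echo "start:  $(rate 50 26.872) containers/s"
echo "stop:   $(rate 50 31.438) containers/s"
echo "delete: $(rate 50 9.049) containers/s"
echo "launch: $(rate 50 154.385) containers/s"
```

This works out to about 0.81/s for init, 1.86/s for start, 1.59/s for stop, 5.53/s for delete and 0.32/s for launch, that is, roughly 3 seconds per launched container.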

Once again, we delete them while they are running (includes stop and delete). The total duration is similar to stop and delete done separately.

root@ubuntu-incus-dev-4gb-fsn1-1:~# /opt/incus/bin/incus-benchmark delete

Test environment:

Server backend: incus

Server version: 0.4

Kernel: Linux

Kernel architecture: aarch64

Kernel version: 5.15.0-91-generic

Storage backend: zfs

Storage version: 2.1.5-1ubuntu6~22.04.2

Container backend: lxc

Container version: 5.0.3

[Jan 18 01:26:44.458] Deleting 50 containers

[Jan 18 01:26:44.458] Batch processing start

[Jan 18 01:26:46.007] Processed 2 containers in 1.548s (1.292/s)

[Jan 18 01:26:47.511] Processed 4 containers in 3.052s (1.311/s)

[Jan 18 01:26:50.565] Processed 8 containers in 6.107s (1.310/s)

[Jan 18 01:26:56.642] Processed 16 containers in 12.184s (1.313/s)

[Jan 18 01:27:08.298] Processed 32 containers in 23.840s (1.342/s)

[Jan 18 01:27:21.248] Batch processing completed in 36.789s

root@ubuntu-incus-dev-4gb-fsn1-1:~#

By deleting the running containers, the server memory gets freed. The free command then gives a very good indication of the available memory. A 4GB RAM server with Incus can easily accommodate 50 Alpine containers.

root@ubuntu-incus-dev-4gb-fsn1-1:~# free -h

total used free shared buff/cache available

Mem: 3.7Gi 510Mi 3.1Gi 4.0Mi 128Mi 3.1Gi

Swap: 0B 0B 0B

root@ubuntu-incus-dev-4gb-fsn1-1:~#

Stress testing

We have tested with 50 Alpine containers; now let’s test with 10 Ubuntu containers. Will there be any free memory in the end? Yes, about 1GB of RAM remains free.

root@ubuntu-incus-dev-4gb-fsn1-1:~# /opt/incus/bin/incus-benchmark launch --count 10 images:ubuntu/22.04

Test environment:

Server backend: incus

Server version: 0.4

Kernel: Linux

Kernel architecture: aarch64

Kernel version: 5.15.0-91-generic

Storage backend: zfs

Storage version: 2.1.5-1ubuntu6~22.04.2

Container backend: lxc

Container version: 5.0.3

Test variables:

Container count: 10

Container mode: unprivileged

Startup mode: normal startup

Image: images:ubuntu/22.04

Batches: 5

Batch size: 2

Remainder: 0

[Jan 18 01:49:45.417] Found image in local store: fe0be2b7f4d2b97330883f7516a3962524d2a37a9690397384d2bbf46d0da909

[Jan 18 01:49:45.417] Batch processing start

[Jan 18 01:49:52.104] Processed 2 containers in 6.688s (0.299/s)

[Jan 18 01:49:59.088] Processed 4 containers in 13.671s (0.293/s)

[Jan 18 01:50:12.628] Processed 8 containers in 27.212s (0.294/s)

[Jan 18 01:50:20.227] Batch processing completed in 34.810s

root@ubuntu-incus-dev-4gb-fsn1-1:~# free -h

total used free shared buff/cache available

Mem: 3.7Gi 1.8Gi 998Mi 5.0Mi 958Mi 1.7Gi

Swap: 0B 0B 0B

root@ubuntu-incus-dev-4gb-fsn1-1:~#
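A rough per-container figure can be derived from the two free outputs in this post: the "available" column went from 3.1Gi with no containers to 1.7Gi with 10 Ubuntu containers running. The sketch below does that division. Treat it as an estimate only, since free rounds its values and the two readings were taken at different moments. The helper name mib_per_container is made up.

```shell
#!/bin/sh
# Hypothetical helper: estimated MiB of RAM per container, given the
# "available" memory in GiB before and after, and the container count.
mib_per_container() {
    awk -v before="$1" -v after="$2" -v n="$3" \
        'BEGIN { printf "%.0f\n", (before - after) * 1024 / n }'
}

# 3.1Gi available when idle, 1.7Gi with 10 Ubuntu containers running.
echo "about $(mib_per_container 3.1 1.7 10) MiB per Ubuntu container"
```

With these inputs the estimate comes to about 143 MiB per idle Ubuntu container.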

Conclusion

We have tested out Incus on a Hetzner 4GB RAM ARM64 server. The storage pool was on a volume, which should be slower than putting it on the server itself.

Still, we managed to effortlessly fit 50 Alpine containers with 280MB RAM to spare, or 10 Ubuntu containers with 1GB RAM to spare. This is the benefit of Incus. You get a cloud server, and you can easily fit many containers on it.